How Computers make Random Numbers

In software, and in engineering in general, there is necessity for reproducible randomness — numbers and images that seem random, that look and feel random, but are actually generated using definite algorithms. This is called pseudo-randomness, and we’ll be taking a closer look at simple ways to make pseudo random numbers. By the end of the article, we’ll have formulated a simple theorem for producing these seemingly random numbers.

Defining what exactly randomness is can be a challenging task. There are some tests (Kolmogorov complexity for example), that can give you a proper mathematical value for how random a sequence is, but for our case, we won’t bother. We will simply be trying to produce a sequence that has numbers following a seemingly unrelated pattern.

Often, the requirement is not just a single number, but several random ones, generated continuously. Hence, given a starting value, we need to way to produce more values. This starting value is called the seed , and we’ll see how to get the seed later. For now, let’s focus on generating a set of numbers.

Generating Random Numbers from a Seed

One approach could be to apply some crazy mathematical formula to the seed, and mangle it enough so that the output number seems unpredictable, and then use that as the seed for the next iteration — but what should this ‘mangling’ function look like?

Let’s try experimenting with this idea, and see where it gets us. This mangling function will take one value and return another one. Let’s call it R .

R(Input) -> Output

Let’s start off with R being a stupidly simple function. R will simply add 1.

R(x) = x + 1

If our seed value is 1 , R will generate the series 1, 2, 3, 4, … . Not random at all is it? We’ll get there. Let R add any constant c instead of 1 now.

R(x) = x + c

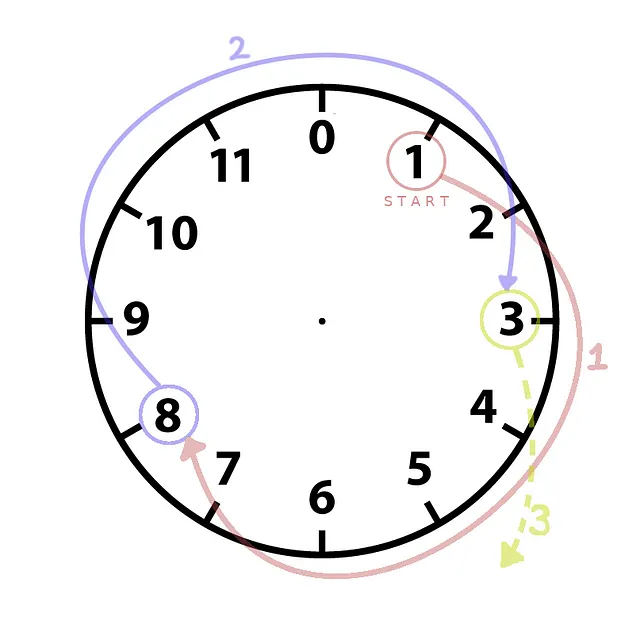

If c is say 7 , we’ll get the series 1, 8, 15, 22, … . We still haven’t gotten very far. One obvious property that we’re missing is the fact that numbers shouldn’t always be increasing — they should be scattered about the rang e. We need to make our number line lead back onto itself so that it will produce smaller values too — a number circle !

Consider a clock face — our number line starts at 1, and wraps around at 12 (or 0). Since computers are more comfortable with math that begins at 0, let’s assume clocks have 0 at the top instead of 12.

1 8 3 10 5 0 7 2 9 4 11 6 1 8 ...

Progress! We observe that after 12 numbers, our series continues to repeat, no matter what number we choose to start at. This is a very important property to keep in mind — if our cycle has n elements, we can only generate a maximum of n elements before our sequence repeats.

Let’s revise our R function to match our logic. Limiting the cycle length is nothing but the modulus or remainder operator.

R(x) = (x + c) % m

At this point, you’ll also observe that some numbers are inherently bad choices for c . If c was 4 and we started at 1, our sequence would be 1, 5, 9, 1, 5, 9, 1, 5, 9, … which is a horrible sequence! Its not random at all! Its clear that the numbers we choose for the length of the cycle and the number we choose for the jump c must be related in a special way.

If you try this out for a couple of values, the property is easy to see — m and c must be relatively prime, also called co-prime.

Till now, we’ve considered jumping numbers by addition, but what about by multiplication? Perhaps we can multiply x by a constant, say a .

R(x) = (ax + c) % m

The properties that a must obey so that we produce a full cycle are a little more specific than for c . In order to produce a valid cycle of numbers,

These properties, along with the rule that m and c must be co-prime, constitute the Hull-Dobell theorem¹. The proof of this theorem is beyond the scope of this article. You could of course, come to the same conclusions if you took a bunch of values for the different constants and see what you get!

Deciding the seed

One thing we haven’t addressed yet, is the issue of deciding the initial seed. We could fix it to some constant number. This is useful in cases where you need random numbers, but they have to be the same every time the program is executed — generating the same map every game for example.

Another way to decide the seed is to fetch it from a source that is different each time the program is executed — like the system time. This useful in a case where a general random number is needed, like a program to simulate a dice roll.

Our End Result

Applying a function on its result over and over like we’re doing is simply a recurrence relation. Let’s define our formula as a recurrence relation.

The final relation looks like this:

x(n) = (a * x(n-1) + c) % m

Where x0 — or the first starting value of x — is the seed, a is the multiplier, c is the constant to add, and m is the modulus.

What we’ve made here is called a Linear Congruential Generator, or an LCG. These are popularly used, because the calculation is very fast and they’re simple to implement.

Different languages and compilers have different implementations of the LCG, meaning the values of these constants change. For example, the random number function in libc , the C standard library for Linux, uses m = 2^32 , a = 1664525 , and c = 1013904223 . A compiler like gcc would typically use these values.

Final Remarks

There are more complex generation algorithms out there, but the LCG is a simple and classic one, and is easy to understand as well. If you’d like to dive deeper, do check out the original paper by Hull and Dobell (linked below), which has elegant proofs for the LCG conditions.

Random number generation has abundant applications in computer science, and is especially important in cryptography.

That’s all for this article, thanks for reading!

[1] Hull and Dobell (1962) Random Number Generators