The Woes of Non-Determinism

Naturally, measuring CPU time isn’t going to cut it. Machine code varies across architectures and operating systems, so the same program will take different amounts of time to run on different machines. Even on a single machine, factors like cache misses matter enough to skew the CPU time of the exact same code from one run to the next. I needed to do something a bit smarter…

Motivation

I was faced with this problem while working on my project, Code Character. Code Character is an online AI challenge where contestants write bots to control an army in a turn-based strategy game. I wanted to limit the amount of code a contestant could execute in a turn.

My first thought was to simply time the code, but as we’ve seen, timing isn’t deterministic, so a contestant submitting the same code twice could get completely different results. In fact, we tried this approach, and the results varied so wildly that a contestant could win or lose with the exact same code! The outcome was essentially random, and we had to scrap the idea of using time altogether.

LLVM Bitcode

Since we couldn’t measure time, we decided to measure the number of instructions being executed instead. This meant we needed a way to instrument the contestants’ code. If you’re unfamiliar with the term, instrumentation means adding code to an application in order to monitor some aspect of its behavior, such as CPU usage or execution time. In our case, we needed to monitor the number of instructions a contestant’s bot executed. Naturally, we couldn’t expect contestants to instrument their bots themselves, so we had to automate this process behind the scenes.
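At its simplest, instrumentation is just a counter added by hand. In this minimal C++ sketch, `SumTo` and the global `g_loop_iterations` are hypothetical names: the one added line records how many loop iterations ran, without changing what the function returns. Code Character needed the same idea applied automatically, and at the level of instructions rather than loop iterations.

```cpp
// Hypothetical counter added purely for monitoring; it plays no part in the
// original computation.
static unsigned long long g_loop_iterations = 0;

// Sums the integers 1..n. The only instrumentation is the counter bump inside
// the loop, so the function returns exactly what the uninstrumented version would.
int SumTo(int n) {
    int total = 0;
    for (int i = 1; i <= n; ++i) {
        ++g_loop_iterations;  // instrumentation
        total += i;
    }
    return total;
}
```

Calling `SumTo(10)` still returns 55, and afterwards `g_loop_iterations` is 10.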

Since we would be running matches on our own servers, we wanted to avoid the overhead of a runtime instrumentation tool, so a dynamic binary instrumentation tool like Intel’s Pin was unsuitable for our purposes. Instead, we decided to directly instrument the contestants’ code to count the number of instructions they would be executing. Rather than instrument binaries (the machine code), we decided to compile the code with Clang and instrument the resulting LLVM IR (stored on disk as LLVM bitcode).

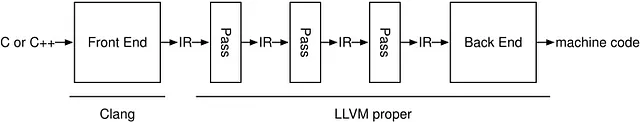

If you’re unfamiliar with LLVM, it’s ‘a collection of modular and reusable compiler and toolchain technologies’. One of its core projects is the LLVM IR together with its backend. In simple terms, LLVM defines an Intermediate Representation (IR) that a compiler ‘frontend’ can compile code into, and LLVM’s backend then compiles this IR into machine code. Thus, if you were to create a new language, you could let LLVM handle machine code generation and optimization, and only write a frontend that converts your language into LLVM IR.

The Solution

Simply counting the LLVM IR instructions in the code won’t suffice: a loop body appears once in the code but may run thousands of times, and what we need is the number of instructions actually executed. To this end, we developed a simple algorithm built on the concept of a ‘basic block’.

A basic block is a straight-line sequence of instructions whose only entry point is its first instruction and whose only exit point is its last instruction. To get an intuition for this, try dividing any piece of code into contiguous runs of instructions such that no instruction except the last one in a run can branch elsewhere (ifs, loops, returns), and no instruction except the first one in a run can be jumped to (the start of a function, a loop, or an if/else branch). The resulting runs are the basic blocks of the code: sequential code that, once entered, executes from start to finish without any decision about which instruction comes next.
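The dividing rule above can be mechanized with the classic “leader” approach: an instruction starts a new block if it is the first instruction, the target of a jump, or the instruction right after a branch or return. The sketch below applies that rule to a made-up, minimal instruction format (`Op`, `Instr` and `SplitIntoBasicBlocks` are hypothetical names, not LLVM IR or LLVM API); it is an illustration of the concept, not the method Code Character used.

```cpp
#include <iterator>
#include <set>
#include <utility>
#include <vector>

// A made-up, minimal instruction format for illustration only (not LLVM IR).
enum class Op { Plain, Jump, CondJump, Return };

struct Instr {
    Op op;
    int target;  // index of the jump target; ignored for Plain and Return
};

// Splits a function's instruction list into basic blocks using the "leader" rule:
// an instruction starts a new block if it is (1) the first instruction,
// (2) the target of a jump, or (3) the instruction right after a jump or return.
// Returns half-open [begin, end) index ranges, one per block, in program order.
std::vector<std::pair<int, int>> SplitIntoBasicBlocks(const std::vector<Instr>& code) {
    const int n = static_cast<int>(code.size());
    std::set<int> leaders;  // std::set keeps leaders sorted and unique
    if (n > 0) leaders.insert(0);
    for (int i = 0; i < n; ++i) {
        const Instr& in = code[i];
        const bool is_jump = in.op == Op::Jump || in.op == Op::CondJump;
        if (is_jump && in.target >= 0 && in.target < n) leaders.insert(in.target);
        // A block ends at a branch or return, so the next instruction (if any)
        // starts a new one.
        if ((is_jump || in.op == Op::Return) && i + 1 < n) leaders.insert(i + 1);
    }
    std::vector<std::pair<int, int>> blocks;
    for (auto it = leaders.begin(); it != leaders.end(); ++it) {
        auto next = std::next(it);
        const int end = (next == leaders.end()) ? n : *next;
        blocks.emplace_back(*it, end);
    }
    return blocks;
}
```

For a six-instruction loop (initialize, compare, conditional exit to index 5, body, jump back to index 1, return), this yields four blocks, `[0,1)`, `[1,3)`, `[3,5)` and `[5,6)`: the entry, the loop header, the loop body and the exit.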

Why don’t we try doing this right now? Here’s a snippet from the sample code provided to contestants of Code Character:

Using the fact that a basic block only has one entry point (its first instruction) and one exit (its last instruction), we can chop up the above snippet into these basic blocks:

While this helps us get an understanding of how basic blocks work, the algorithm we used in Code Character uses LLVM IR, which looks like this:

If you look closely, you’ll notice that the above snippet is the first three blocks of our C++ snippet compiled to LLVM IR (each <label> line is the start of a basic block).

LLVM has libraries that allow us to write ‘passes’: code that runs over LLVM IR to analyze or transform it. The LLVM API makes it easy to read and analyze LLVM IR by iterating over modules, functions and basic blocks, and even to modify the IR before it is compiled into machine code.

With basic blocks and the LLVM API in hand, counting the number of instructions executed comes down to this simple algorithm:
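A minimal sketch of that algorithm follows, written against a made-up toy instruction set rather than real LLVM IR; the `Inst` format, `Instrument`, `Run` and `MakeLoopExample` are all hypothetical, and this is not the actual Code Character pass. Following this section’s description of the instrumented IR, it places an IncrementCount call right before each block’s last instruction, and assumes that each call adds the number of instructions in its block. The interpreter also keeps a ground-truth count of every original instruction it executes, so the two numbers can be compared.

```cpp
#include <cstddef>
#include <vector>

// A made-up toy instruction set (NOT LLVM IR) with one integer register `r`.
enum class Kind { AddImm, Jump, BranchIfLess, Ret, IncrementCount };

struct Inst {
    Kind kind;
    int a;  // AddImm: value to add; BranchIfLess: bound; IncrementCount: amount
    int b;  // Jump/BranchIfLess: target block when taken
    int c;  // BranchIfLess: target block when not taken
};

using BasicBlock = std::vector<Inst>;  // non-empty; the last Inst is the terminator
using Function = std::vector<BasicBlock>;

// The instrumentation pass: before each block's terminator, insert a call that
// adds the block's original instruction count (terminator included).
Function Instrument(Function f) {
    for (BasicBlock& bb : f) {
        const int n = static_cast<int>(bb.size());
        bb.insert(bb.end() - 1, Inst{Kind::IncrementCount, n, 0, 0});
    }
    return f;
}

struct RunResult {
    int r = 0;               // final register value
    long long executed = 0;  // ground truth: original instructions actually run
    long long counted = 0;   // total reported by inserted IncrementCount calls
};

// Interprets a well-formed `f` starting at block 0 until a Ret is reached.
RunResult Run(const Function& f) {
    RunResult res;
    std::size_t block = 0;
    for (;;) {
        const BasicBlock& bb = f[block];
        // Straight-line part: everything except the terminator.
        for (std::size_t i = 0; i + 1 < bb.size(); ++i) {
            const Inst& in = bb[i];
            if (in.kind == Kind::IncrementCount) {
                res.counted += in.a;  // instrumentation, not original code
            } else if (in.kind == Kind::AddImm) {
                ++res.executed;
                res.r += in.a;
            }
        }
        // Terminator: decides which block runs next.
        const Inst& term = bb.back();
        ++res.executed;
        if (term.kind == Kind::Ret) return res;
        const int next = (term.kind == Kind::Jump || res.r < term.a) ? term.b : term.c;
        block = static_cast<std::size_t>(next);
    }
}

// Example: r = 1; while (r < 10) { r += 2; r += 1; } return.
Function MakeLoopExample() {
    return {
        {Inst{Kind::AddImm, 1, 0, 0}, Inst{Kind::Jump, 0, 1, 0}},  // block 0: entry
        {Inst{Kind::BranchIfLess, 10, 2, 3}},                    // block 1: loop header
        {Inst{Kind::AddImm, 2, 0, 0}, Inst{Kind::AddImm, 1, 0, 0},
         Inst{Kind::Jump, 0, 1, 0}},                             // block 2: loop body
        {Inst{Kind::Ret, 0, 0, 0}},                              // block 3: exit
    };
}
```

The example function contains only 7 instructions, yet running it executes 16. `Run(Instrument(MakeLoopExample())).counted` is also 16, matching the ground truth, while the uninstrumented run reports 0. One update per block is enough because a basic block, once entered, always runs through to its last instruction.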

Our instrumented IR will look like this:

As you can see, a call to IncrementCount is made at the end of every basic block, right before its last instruction. Using the static int that IncrementCount operates on, we can read the instruction count at the end of each of the contestant’s turns. This count is also deterministic and cross-platform, since the same code is guaranteed to generate the same LLVM IR as long as we compile the contestants’ code with the same compiler version and compiler flags.
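On the runtime side, the support code can be tiny. The sketch below is an assumption about how it might look, not the Code Character implementation: the parameter of `IncrementCount` (the block’s instruction count) and the helpers `ResetCount`, `ReadCount` and `WithinBudget` are hypothetical. It departs from the static int described above by using a 64-bit counter, so a long turn cannot overflow it.

```cpp
#include <cstdint>

// Hypothetical runtime support linked into each bot. A 64-bit counter is used
// here (instead of an int) so that a long turn cannot overflow it.
static std::uint64_t g_instruction_count = 0;

// Called by instrumented code just before each basic block's last instruction.
// extern "C" keeps the symbol unmangled, so an instrumentation pass can refer
// to it by its plain name.
extern "C" void IncrementCount(std::uint32_t block_size) {
    g_instruction_count += block_size;
}

// Hypothetical hooks for the game engine: reset before a turn, read after it.
void ResetCount() { g_instruction_count = 0; }
std::uint64_t ReadCount() { return g_instruction_count; }

// Hypothetical per-turn budget check against a limit chosen by the organizers.
bool WithinBudget(std::uint64_t limit) { return ReadCount() <= limit; }
```

In this sketch the instrumented code pays only one addition per executed block. The trade-off of reading the counter at the end of a turn is that an over-budget turn is detected once it finishes rather than at the moment the limit is crossed.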

Conclusion

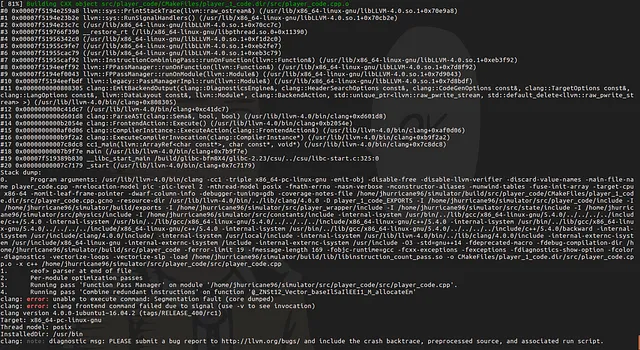

It turns out that profiling code is not the simple matter I once foolishly thought it was. In the process of working on the deceptively simple task of timing code, I ended up reading a fair bit about how compilers work and how LLVM passes are written. If you’re interested in code generation and want to write your own LLVM pass, LLVM itself provides a tutorial on how to get started. There’s also this excellent blog post that I used while writing my own pass. Since the LLVM API is not backwards compatible across major versions, be careful about which LLVM version you’re using.

The final LLVM pass code used is here.